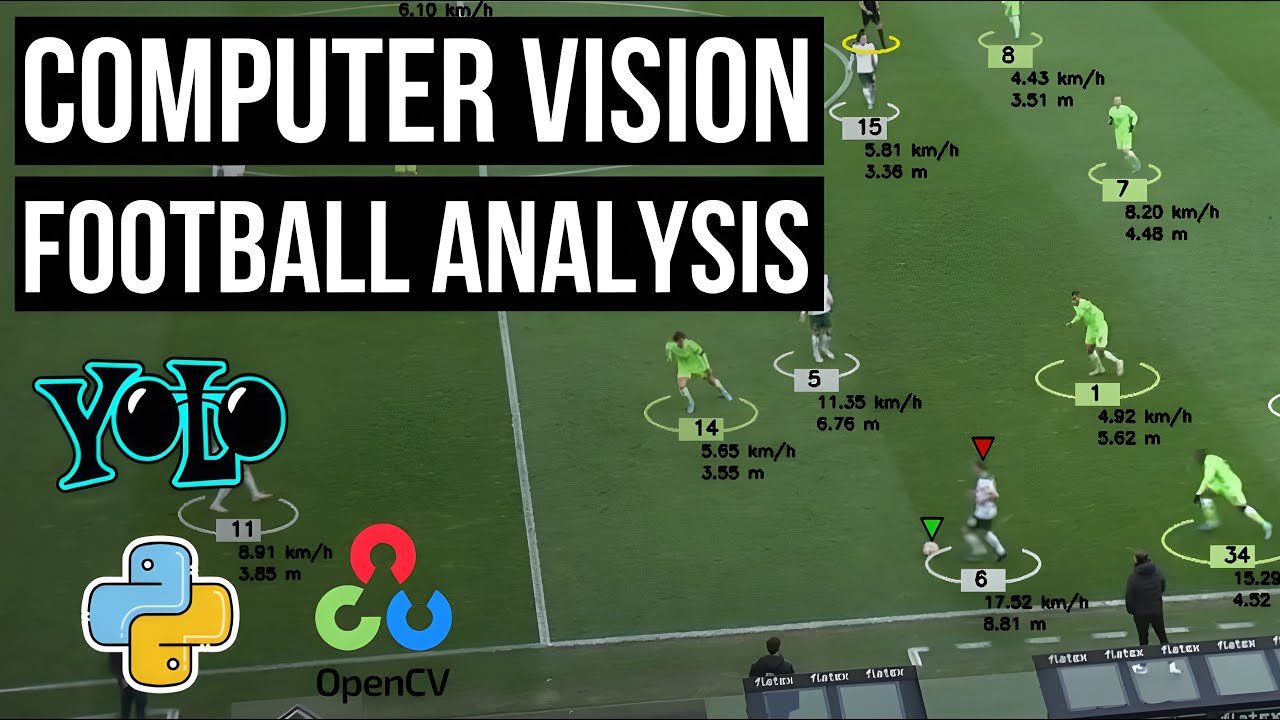

In this video, you’ll learn how to use machine learning, computer vision, and deep learning to create a football analysis system. This project uses YOLO, a state-of-the-art object detector, to detect the players, referees, and footballs. It also uses trackers to follow those objects across frames. We also train our own object detector to enhance the output of the state-of-the-art models. Additionally, we will assign players to teams based on the colors of their t-shirts, using KMeans for pixel segmentation and clustering. We will also use optical flow to measure camera movement between frames, enabling us to accurately measure a player’s movement. Furthermore, we will implement a perspective transformation to represent the scene’s depth and perspective, allowing us to measure a player’s movement in meters rather than pixels. Finally, we will calculate a player’s speed and the distance covered. This project covers various concepts and addresses real-world problems, making it suitable for both beginners and experienced machine learning engineers.
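The team-assignment idea can be sketched in a few lines. This is a minimal, dependency-free k-means with k = 2 written for illustration, not the project’s code; a library implementation such as scikit-learn’s `KMeans` would normally be used instead. It assumes each player crop is a list of rows of RGB tuples, that the shirt sits in the top half of the crop, and that the crop’s corner pixels are background. The helper names `kmeans2` and `shirt_color` are hypothetical.

```python
def _dist2(a, b):
    """Squared Euclidean distance between two color tuples."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def kmeans2(points, iterations=10):
    """Plain k-means with k=2 on a list of color tuples; returns (labels, centers)."""
    # Deterministic initialization: the first point and the point farthest from it.
    centers = [points[0], max(points, key=lambda p: _dist2(p, points[0]))]
    labels = [0] * len(points)
    for _ in range(iterations):
        # Assignment step: each point joins its nearest center.
        labels = [0 if _dist2(p, centers[0]) <= _dist2(p, centers[1]) else 1
                  for p in points]
        # Update step: move each center to the mean of its members.
        for k in (0, 1):
            members = [p for p, lab in zip(points, labels) if lab == k]
            if members:
                centers[k] = tuple(sum(ch) / len(members) for ch in zip(*members))
    return labels, centers


def shirt_color(crop):
    """Estimate a player's shirt color from a crop given as rows of RGB tuples."""
    # Assumption: the shirt occupies the top half of the bounding-box crop.
    top = crop[: max(1, len(crop) // 2)]
    width = len(top[0])
    pixels = [px for row in top for px in row]
    labels, centers = kmeans2(pixels)
    # Assumption: the four corner pixels are background (grass), so the
    # cluster most corners fall into is treated as the background cluster.
    corners = [labels[0], labels[width - 1], labels[-width], labels[-1]]
    background = max(set(corners), key=corners.count)
    return centers[1 - background]


# Made-up 4x4 crop: green corners, red shirt in the middle of the top half.
G, R = (0, 128, 0), (200, 0, 0)
crop = [[G, R, R, G], [G, R, R, G], [G, G, G, G], [G, G, G, G]]
print(shirt_color(crop))  # → (200.0, 0.0, 0.0)
```

Running `kmeans2` a second time on all players’ shirt colors splits them into two teams; for example, `kmeans2([(200, 0, 0), (210, 5, 5), (0, 0, 220), (5, 5, 200)])[0]` gives `[0, 0, 1, 1]`.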

In this video, you will learn how to:

1. Use Ultralytics and YOLOv8 to detect objects in images and videos.

2. Fine-tune and train YOLO on your own custom dataset.

3. Use KMeans to cluster pixels and segment players from the background to get the t-shirt color accurately.

4. Use optical flow to measure the camera movement.

5. Use OpenCV’s (cv2) perspective transformation to represent the scene’s depth and perspective.

6. Measure a player’s speed and the distance covered in the video.
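For step 6, once each player’s position has been compensated for camera movement and transformed into meters, speed and distance reduce to simple arithmetic over a window of frames. In this sketch, distance is the straight-line gap between a window’s first and last positions, and speed is that distance divided by the elapsed time (frames / fps), multiplied by 3.6 to convert m/s to km/h. The function name, the 5-frame window, and the input layout are assumptions for illustration.

```python
import math


def speed_and_distance(positions_m, fps, frame_window=5):
    """Return (speeds_kmh, distances_m), one value per frame, for one player.

    positions_m: list of (x, y) positions in meters, one per frame, assumed to be
    already corrected for camera movement and perspective-transformed.
    """
    n = len(positions_m)
    speeds = [0.0] * n
    distances = [0.0] * n
    total = 0.0
    for start in range(0, n - 1, frame_window):
        end = min(start + frame_window, n - 1)
        covered = math.dist(positions_m[start], positions_m[end])  # meters
        elapsed = (end - start) / fps  # seconds
        speed_kmh = covered / elapsed * 3.6  # m/s -> km/h
        total += covered
        # Every frame in the window reports the window's speed and the running
        # total at the window's end (the next window overwrites the shared frame).
        for i in range(start, end + 1):
            speeds[i] = speed_kmh
            distances[i] = total
    return speeds, distances


# A player moving 1 m per frame along x, filmed at 25 fps (made-up numbers).
positions = [(float(i), 0.0) for i in range(11)]
speeds, distances = speed_and_distance(positions, fps=25)
print(round(speeds[0], 1), distances[-1])  # → 90.0 10.0
```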

Datasets:

Kaggle Dataset:

Video link (used because Kaggle removed the videos from the Kaggle dataset above):

Roboflow Football Dataset:

GitHub Link:

🔑 TIMESTAMPS

================================

0:00 – Introduction

1:19 – Object detection (YOLO) and tracking

1:55:30 – Player color assignment

2:32:00 – Ball interpolation

3:06:25 – Camera movement estimator

3:41:50 – Perspective transformer

4:05:40 – Speed and distance estimator
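The ball interpolation step fills frames where the detector misses the ball. A minimal sketch, assuming one bounding box `(x1, y1, x2, y2)` per frame with `None` for missed detections: gaps between two detections are filled linearly, and gaps at the start or end repeat the nearest detection. The video’s own implementation may use pandas for this; `interpolate_ball` is a hypothetical name.

```python
def interpolate_ball(bboxes):
    """Fill missing ball boxes (None) by linear interpolation between detections."""
    filled = list(bboxes)
    known = [i for i, box in enumerate(bboxes) if box is not None]
    if not known:
        return filled  # nothing to interpolate from
    # Linear interpolation inside each gap between two consecutive detections.
    for a, b in zip(known, known[1:]):
        for i in range(a + 1, b):
            t = (i - a) / (b - a)
            filled[i] = tuple(pa + t * (pb - pa) for pa, pb in zip(bboxes[a], bboxes[b]))
    # Before the first and after the last detection: repeat the nearest box.
    for i in range(known[0]):
        filled[i] = bboxes[known[0]]
    for i in range(known[-1] + 1, len(filled)):
        filled[i] = bboxes[known[-1]]
    return filled


print(interpolate_ball([(0, 0, 10, 10), None, (10, 10, 20, 20)]))
# → [(0, 0, 10, 10), (5.0, 5.0, 15.0, 15.0), (10, 10, 20, 20)]
```

Because interior gaps need the detection that comes after them, this only works on a recorded video or on a live stream with some buffering delay.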


One problem I am facing is that my players’ tracking IDs change when they move from one frame to another. Can anyone help me with this? I am using the same code for my clip.

Does anyone have the dataset? The dataset on Kaggle has been removed.

1:22:33

Hello Mr. Abdullah,

I have a question:

How do you handle a missing ball in a real-time live feed rather than in a recorded video?

I tried using Shai,

but it uses a lot of memory.

Is anyone not getting the ball when tracking? Could this be an issue with model training? Nothing wrong with the tracker code on my end.

1:04:15 I am stuck at this step. When I run my main.py, it says

ModuleNotFoundError: No module named 'trackers'

even though I have imported the tracker folder and everything correctly; its name shows up in green.

What should I do?
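One way to narrow this down: when you run `python main.py`, Python puts the folder containing `main.py` first on `sys.path`, so `import trackers` only succeeds if a folder named exactly `trackers` sits directly beside `main.py` (a folder named `tracker`, for instance, would not match). The hypothetical helper below, a sketch using only the standard library, reports where a module was found or lists the folders next to the script.

```python
import importlib.util
import os
import sys


def explain_missing_module(name):
    """Return a short diagnosis for `import name` (hypothetical helper)."""
    spec = importlib.util.find_spec(name)
    if spec is not None:
        # Importable: report where Python found it (a file or a package directory).
        where = spec.origin or ", ".join(spec.submodule_search_locations or [])
        return f"{name!r} is importable from {where}"
    # When you run `python main.py`, sys.path[0] is the folder containing main.py.
    script_dir = os.path.abspath(sys.path[0] or os.getcwd())
    folders = []
    if os.path.isdir(script_dir):
        folders = sorted(e for e in os.listdir(script_dir)
                         if os.path.isdir(os.path.join(script_dir, e)))
    return (f"{name!r} was not found on sys.path; folders next to the script "
            f"in {script_dir}: {folders}")


# Temporarily place this at the top of main.py, before `from trackers import ...`:
print(explain_missing_module("trackers"))
```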

Bro, during the tracking process the output video shows wrong track IDs for players, like 234 and 700. What should I do?
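Large numbers like these are not necessarily a bug: tracker IDs are typically running counters, and a player who is lost and then re-detected usually receives a new ID. If the goal is only cleaner labels in the output video, a hypothetical post-processing step can relabel IDs as 1, 2, 3, and so on, in order of first appearance. This is cosmetic and does not fix ID switches. The sketch assumes one dict per frame mapping track ID to bounding box.

```python
def compact_track_ids(tracks_per_frame):
    """Relabel raw tracker IDs as 1, 2, 3, ... in order of first appearance.

    tracks_per_frame: one dict per frame mapping raw track ID -> bounding box
    (an assumed layout). Returns (relabeled_frames, id_mapping).
    """
    id_mapping = {}
    relabeled = []
    for frame_tracks in tracks_per_frame:
        new_frame = {}
        for raw_id, bbox in frame_tracks.items():
            # First time this raw ID appears: give it the next compact ID.
            if raw_id not in id_mapping:
                id_mapping[raw_id] = len(id_mapping) + 1
            new_frame[id_mapping[raw_id]] = bbox
        relabeled.append(new_frame)
    return relabeled, id_mapping


frames = [{234: (0, 0, 10, 20), 700: (50, 0, 60, 20)},
          {700: (52, 0, 62, 20), 901: (80, 0, 90, 20)}]
relabeled, mapping = compact_track_ids(frames)
print(mapping)  # → {234: 1, 700: 2, 901: 3}
```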

Why is my Roboflow API key not working for downloading the dataset?

Thank you very much

Bro, can I use Windows for this project?

1:40:00

Hello sir, I hope you're doing well. I got an error that I have tried to solve, but I haven't found a solution. Could you please help me? Here is the error:

PS C:\Users\chris\football_analysis> & c:/Users/chris/football_analysis/env/Scripts/python.exe c:/Users/chris/football_analysis/main.py
Traceback (most recent call last):
  File "c:\Users\chris\football_analysis\main.py", line 87, in <module>
    main()
    ~~~~^^
  File "c:\Users\chris\football_analysis\main.py", line 26, in main
    camera_movement_estimator = CameraMovementEstimator(video_frames[0])
                                                        ~~~~~~~~~~~~^^^
IndexError: list index out of range

PS C:\Users\chris\football_analysis> & c:/Users/chris/football_analysis/env/Scripts/python.exe c:/Users/chris/football_analysis/main.py
Traceback (most recent call last):
  File "c:\Users\chris\football_analysis\main.py", line 87, in <module>
    main()
    ~~~~^^
  File "c:\Users\chris\football_analysis\main.py", line 26, in main
    camera_movement_estimator = CameraMovementEstimator(video_frames[2])
                                                        ~~~~~~~~~~~~^^^
IndexError: list index out of range
PS C:\Users\chris\football_analysis>
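`video_frames[0]` raising `IndexError` means the list of frames is empty, which usually points to the input video not being read at all; OpenCV’s `cv2.VideoCapture` does not raise on a wrong path, it simply yields no frames. A hypothetical guard like the one below, called right after the frames are read, turns that into a clear message. It assumes the frames are collected into a Python list; `check_frames` and the usage line are illustrative, not the project’s code.

```python
import os


def check_frames(video_frames, video_path):
    """Fail early with a clear message instead of a later IndexError (hypothetical)."""
    if not os.path.isfile(video_path):
        raise FileNotFoundError(
            f"Input video not found: {os.path.abspath(video_path)!r}. "
            "Relative paths are resolved from the current working directory.")
    if len(video_frames) == 0:
        raise ValueError(
            f"No frames were read from {video_path!r}; the file may be empty, "
            "corrupted, or use a codec OpenCV cannot decode.")
    return video_frames


# Hypothetical usage in main.py (placeholder path and reader function name):
# video_frames = check_frames(read_video("input_videos/my_clip.mp4"), "input_videos/my_clip.mp4")
```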

Great project 🎉

Guys, I couldn't find the dataset on Kaggle.

For those stuck in Google Colab where training shows epoch 0 and then stops: make sure to change the runtime type to GPU.

Hello Abdullah, and thank you for the very detailed video; it really inspired me to try it. I want to ask you one thing: I am now at the training phase. I see that your training in Google Colab finished 10 epochs in about 6 minutes. For me it takes much, much longer; Colab kicks me out after 3 hours, so I started the training on my MacBook Pro M4. It has now been running for 30 hours and is at epoch 80. Is it OK if I stop the training manually, or should I wait for it to finish? Or should I have changed the early stopping parameters? Thank you.


Can you just send the best and last weights? I am unable to train the model.

I need help because I am doing this as my graduation project. If you can help, please reply or give me an email and I will send everything you need to know, because the due date is 15 days away.

Unfortunately it looks like the data is no longer there

Hello, how can I integrate this system so that users can input any video they want and click "Analyze" to get an annotated output video like the one generated in this project?

Guys, where are you getting the dataset from? It seems Kaggle has removed it.

Can someone give me a learning path to reach this level from zero? I am highly impressed by how you did it. Thanks!

1:12:28

The best.pt doesn't work for me. It says something about models, and I put it there, but nothing happens.

I got the following error in main.py:

tracker = Tracker('models/best.pt')

TypeError: Tracker() takes no arguments

Also, the output video is not being saved in the output_videos folder, and I can't see the cropped image in that folder either.

Can someone help me solve this?
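`TypeError: Tracker() takes no arguments` is the error Python raises when a class has no `__init__` that accepts parameters, so the argument `'models/best.pt'` has nowhere to go. A frequent cause, offered here as a guess about this case, is a misspelled constructor such as `_init_` or `__int__`. The sketch contrasts the two; both class bodies are simplified placeholders, not the project’s `Tracker`.

```python
class BrokenTracker:
    # Bug: single underscores, so Python does not treat this as the constructor
    # and falls back to object's default one, which accepts no arguments.
    def _init_(self, model_path):
        self.model_path = model_path


class Tracker:
    # Fix: the constructor must be spelled __init__ (two underscores on each side).
    def __init__(self, model_path):
        self.model_path = model_path
        # In the real project this is where the detection model would be loaded,
        # e.g. `self.model = YOLO(model_path)` from ultralytics; omitted here so
        # the sketch runs without extra dependencies.


try:
    BrokenTracker("models/best.pt")
except TypeError as err:
    print(err)  # → BrokenTracker() takes no arguments

tracker = Tracker("models/best.pt")
print(tracker.model_path)  # → models/best.pt
```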

While training, it did not complete any epoch. Training stops in VS Code and Colab doesn't respond. The training folder is generated, but the weights folder doesn't show the two weight files; it is empty.

Starting training for 10 epochs…

Closing dataloader mosaic

albumentations: Blur(p=0.01, blur_limit=(3, 7)), MedianBlur(p=0.01, blur_limit=(3, 7)), ToGray(p=0.01, num_output_channels=3, method='weighted_average'), CLAHE(p=0.01, clip_limit=(1.0, 4.0), tile_grid_size=(8, 8))

Epoch GPU_mem box_loss cls_loss dfl_loss Instances Size

0% 0/39 [00:08<?, ?it/s]

Hi guys! I want to follow this tutorial using a similar video to make it original and share it. Can I do that, or do I have to use the one used here?

Hi, once your model is trained and you have a tactical map with keypoints and detected objects, how do you then capture event data from this map?

i.e., passes, shots, goals, distance covered, etc.

34:28

24:15 "I am going to blur my API key," then proceeds to show it in VS Code. Just a heads-up: it is visible to us, in case you didn't know.

Is YOLOv5 still the most accurate?

Can someone help me implement this project in Streamlit with a video upload feature?

Going to try to take this info and do something similar with basketball. Trying to watch a prime-time Shaq game through a computer science lens, lol.

beautiful

What are the extensions you are using for vscode? Specifically the one that predicts what you could be wanting to write next in the line of code.

This tutorial was an absolute masterpiece! 🎨⚽ Your clear explanations and in-depth analysis made the soccer analysis project so much easier to understand. I truly appreciate the effort and expertise you put into this—thank you for sharing your knowledge! 🙌🔥